I own a homelab server based on a Supermicro X10SDV motherboard. I haven't been paying attention to the latest IPMI firmware updates lately and that's a real shame. In June, Supermicro finally released the update that enables iKVM over HTML5. You read that right, no more Java!

On the main page for my motherboard (http://www.supermicro.com/products/motherboard/Xeon/D/X10SDV-6C-TLN4F.cfm) I was able to download the fresh version 3.58 of Redfish.

Since my homelab is running Windows Server 2016 at the moment, installing this firmware is a breeze. In the downloaded ZIP file you'll find a simple firmware flash utility.

I simply copied the .BIN file into the utility folder and ran the executable with the right parameters (in an elevated command prompt, just to be sure). Since it's a local KCS connection, there are no authentication or network transfer issues.

The installation itself took a few minutes, and after that you can use the shiny new HTML5 console!

To show you what this looks like, the picture below displays the remote console as well as the command used to update the firmware.

And before you ask: Yes, this works on a mobile device! Here are some screenshots of my Android phone using the HTML5 console.

Any reason to get rid of Java is a reason for cake! I really dislike those Ask! toolbars.

So far I haven't run into any issues, so I recommend that all users of an X10 generation Supermicro motherboard check whether the IPMI firmware update is available for them.

01 September 2017

11 June 2017

Easy storage benchmark script based on Diskspd

Intro

Most of the projects I do for work have some part of storage and virtualisation in them. In order to get a good feeling for what a certain storage platform can deliver, I try to run at least one benchmark. In the past, it has been a pain to get the benchmarks right to be able to compare the results. I sometimes forget which tool I used last. The storage is not always easily accessible to the tool and some tools end up overloading the CPU.

Diskspd to the rescue

I've been following the development of Diskspd (https://github.com/Microsoft/diskspd) with interest ever since I saw a demo in a Storage Spaces talk at a Microsoft conference, where it was described as an internal load test tool meant to replace SQLIO. Diskspd is easy to use, gives consistent results and is customisable for the type of workload you're trying to mimic.

It's command-line based, so it runs on almost any version of Windows (even Hyper-V Server and Nano Server). Being CLI based also means it's easy to script, and others have built great scripts to run a benchmark based on all sorts of settings.


Putting it all together

The blog post by Jose Barreto (https://blogs.technet.microsoft.com/josebda/2015/07/03/drive-performance-report-generator-powershell-script-using-diskspd-by-arnaud-torres/) really inspired me to try a more diverse approach to benchmarking with lots of different settings in order to generate a "fingerprint" of sorts for any given storage system. This script is not meant to give an in-depth view of a storage system's performance for your particular workload, but it makes it possible to compare different systems and their strong and weak points using a single worker and a limited set of workloads. In short: a great way to get a ballpark figure for a storage system.

Description of the script

The script works by asking for a few parameters:

- location to store the test file

- size of the test file (at least a few times the cache size)

- duration of each iteration (I use 60 seconds for a standard test run)

A number of parameters for the iterations are hardcoded into the script

- threadcount = the number of cores that are available to the VM/host where the benchmark is running

- queue depth = we will run all tests with a queue depth of 1, 8, 16 and 32 outstanding IOs

- blocksize = we will run all tests with a blocksize of 4k, 8k, 64k and 512k

- read/write ratio = we will run all tests with a read/write ratio of 100/0, 70/30 and 0/100

- random/sequential = we will run all tests with both random and sequential IO

- repeat = to make sure the test iterations are somewhat representative, we will run four iterations with the same parameters in a row

As you can see, this list adds up to quite a number of iterations: 384 of them. As each iteration needs 60 seconds to run, a full run takes almost six and a half hours, so it's not something you run during your lunch break.
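The iteration count follows directly from the hardcoded lists. As a quick sanity check, the sketch below multiplies the list lengths and estimates the run time, assuming 60 seconds per iteration and ignoring the time Diskspd needs to create the test file. The variable names are only there for illustration; they are not the ones used in the script.

```powershell
# Parameter lists as described above (variable names are illustrative)
$QueueDepths  = 1, 8, 16, 32
$BlockSizesKB = 4, 8, 64, 512
$WritePercent = 0, 30, 100     # read/write ratios 100/0, 70/30 and 0/100
$AccessTypes  = 'r', 'si'      # random and sequential
$Repeats      = 4
$SecondsPerIteration = 60      # assumption: my standard test run duration

# Every combination of settings is run $Repeats times
$Iterations = $QueueDepths.Count * $BlockSizesKB.Count * $WritePercent.Count * $AccessTypes.Count * $Repeats
$Hours = $Iterations * $SecondsPerIteration / 3600

"{0} iterations, roughly {1:N1} hours" -f $Iterations, $Hours
```

Shortening the duration or dropping one of the queue depths is the quickest way to bring the total down.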

The last part of the script handles some formatting to get all the relevant numbers on one line (so it's easy to store as a CSV file later on) and outputs to console and file.
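To give an idea of what that formatting step does, here is a small sketch that runs the same Split and Trim logic against two hand-written lines. The sample lines only imitate the shape of the Diskspd summary rows the script looks for (one starting with total: and one starting with avg.). The numbers are made up and real output contains many more lines.

```powershell
# Made-up sample lines in the shape the script expects from Diskspd (not real measurements)
$result = @(
    'avg.|  12.50%|   3.10%|   9.40%|  87.50%'
    'total:      6442450944 |        98304 |     102.40 |    1638.40 |    0.610 |     0.120'
)

# Take the first line starting with "total:" and the first one starting with "avg."
$total = $result | Where-Object { $_ -like 'total:*' } | Select-Object -First 1
$avg   = $result | Where-Object { $_ -like 'avg.*' }   | Select-Object -First 1

# Columns are separated by "|"; pick the interesting ones and strip the padding
$mbps    = $total.Split('|')[2].Trim()
$iops    = $total.Split('|')[3].Trim()
$latency = $total.Split('|')[4].Trim()
$cpu     = $avg.Split('|')[1].Trim()

# One comma-separated line per run
$line = "$iops,$mbps,$latency,$cpu"
$line
```

The column positions are the ones my script relies on, so if a newer Diskspd version changes its report layout, the indexes are the first thing to check.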

Related automated benchmark: VMFleet

The other tool that Microsoft released on the same GitHub page is VMFleet. This script launches a number of VMs and kicks off a Diskspd worker in them. Since most hyperconverged or active-active storage solutions are able to handle multiple IO streams at once, this is a great way to (synthetically) load test a storage system that can handle a large number of simultaneous workloads.

The code itself

    # Drive performance Report Generator
    # Original by Arnaud TORRES, Edited by Hans Lenze Kaper on 25 - sep - 2015

    # Clear screen
    Clear-Host

    Write-Host "DRIVE PERFORMANCE REPORT GENERATOR" -ForegroundColor Green
    Write-Host "Script will stress your computer CPU and storage layer (including network if applicable!), be sure that no critical workload is running" -ForegroundColor Yellow

    # Disk to test
    $Disk = Read-Host 'Which path would you like to test? (example - C:\ClusterStorage\Volume1 or \\fileserver\share or S:) Without the trailing \'

    # Reset test counter
    $counter = 0

    # Use 1 thread / core (summed, so a multi-socket host also yields a single number)
    $Thread = "-t" + (Get-WmiObject Win32_Processor | Measure-Object -Property NumberOfCores -Sum).Sum

    # Set time in seconds for each run
    # 10-120s is fine
    $TimeInput = Read-Host 'Duration: How long should each run take in seconds? (example - 60)'
    $Time = "-d" + $TimeInput

    # Choose how big the benchmark file should be. Make sure it is at least two times the size of the available cache.
    $Capacity = Read-Host 'Testfile size: How big should the benchmark file be in GigaBytes? At least two times the cache size (example - 100)'
    $CapacityParameter = "-c" + $Capacity + "G"

    # Make sure the folder for the test file exists
    New-Item -ItemType Directory -Path "$Disk\TestDiskSpd" -Force | Out-Null

    # Get date for the output file
    $date = Get-Date

    # Add the tested disk and the date in the output file
    "Command used for the runs .\diskspd.exe -c[testfileSize]G -d[duration] -[randomOrSequential] -w[%write] -t[NumberOfThreads] -o[queue] -b[blocksize] -h -L $Disk\TestDiskSpd\testfile.dat, $date" >> ./output.txt

    # Add the headers to the output file
    "Test N#, Drive, Operation, Access, Blocks, QueueDepth, Run N#, IOPS, MB/sec, Latency ms, CPU %" >> ./output.txt

    # Number of tests
    # Multiply the number of loops to change this value
    # By default there are : (4 queue depths) x (4 blocks sizes) X (3 for read 100%, 70/30 and write 100%) X (2 for Sequential and Random) X (4 Runs of each)
    $NumberOfTests = 384

    Write-Host "TEST RESULTS (also logged in .\output.txt)" -ForegroundColor Yellow

    # Begin Tests loops

    # We will run the tests with 1, 8, 16 and 32 queue depth
    (1,8,16,32) | ForEach-Object {
        $queueparameter = ("-o" + $_)
        $queue = ("QueueDepth " + $_)

        # We will run the tests with 4K, 8K, 64K and 512K block
        (4,8,64,512) | ForEach-Object {
            $BlockParameter = ("-b" + $_ + "K")
            $Blocks = ("Blocks " + $_ + "K")

            # We will do Read tests, 70/30 Read/Write and Write tests
            (0,30,100) | ForEach-Object {
                if ($_ -eq 0)   { $IO = "Read" }
                if ($_ -eq 30)  { $IO = "Mixed" }
                if ($_ -eq 100) { $IO = "Write" }
                $WriteParameter = "-w" + $_

                # We will do random and sequential IO tests
                ("r","si") | ForEach-Object {
                    if ($_ -eq "r")  { $type = "Random" }
                    if ($_ -eq "si") { $type = "Sequential" }
                    $AccessParameter = "-" + $_

                    # Each run will be done 4 times for consistency
                    (1..4) | ForEach-Object {

                        # The test itself (finally !!)
                        $result = .\diskspd.exe $CapacityParameter $Time $AccessParameter $WriteParameter $Thread $queueparameter $BlockParameter -h -L $Disk\TestDiskSpd\testfile.dat

                        # Now we will break the very verbose output of DiskSpd in a single line with the most important values
                        foreach ($line in $result) { if ($line -like "total:*") { $total = $line; break } }
                        foreach ($line in $result) { if ($line -like "avg.*") { $avg = $line; break } }
                        $mbps = $total.Split("|")[2].Trim()
                        $iops = $total.Split("|")[3].Trim()
                        $latency = $total.Split("|")[4].Trim()
                        $cpu = $avg.Split("|")[1].Trim()
                        $counter = $counter + 1

                        # A progress bar, for fun
                        Write-Progress -Activity ".\diskspd.exe $CapacityParameter $Time $AccessParameter $WriteParameter $Thread $queueparameter $BlockParameter -h -L $Disk\TestDiskSpd\testfile.dat" -Status "Test in progress" -PercentComplete ($counter / $NumberOfTests * 100)

                        # We output the values to the text file
                        "Test $counter,$Disk,$IO,$type,$Blocks,$queue,Run $_,$iops,$mbps,$latency,$cpu" >> ./output.txt

                        # We output a verbose format on screen
                        "Test $counter, $Disk, $IO, $type, $Blocks, $queue, Run $_, $iops iops, $mbps MB/sec, $latency ms, $cpu CPU"
                    }
                }
            }
        }
    }
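Because every run ends up as one comma-separated line, averaging the four repeats takes only a few lines of PowerShell. The sketch below uses made-up result lines and column names of my own choosing. To use it on a real log, read the data lines from output.txt instead and skip the two header lines at the top.

```powershell
# Made-up result lines in the format the script writes (not real measurements)
$lines = @(
    'Test 1,S:,Read,Random,Blocks 4K,QueueDepth 1,Run 1,1000.00,3.91,0.98,5.00%'
    'Test 2,S:,Read,Random,Blocks 4K,QueueDepth 1,Run 2,1100.00,4.30,0.90,5.50%'
    'Test 3,S:,Read,Random,Blocks 4K,QueueDepth 1,Run 3,900.00,3.52,1.10,4.50%'
    'Test 4,S:,Read,Random,Blocks 4K,QueueDepth 1,Run 4,1000.00,3.91,1.02,5.00%'
)

# Column names are my own; the header line in output.txt uses slightly different labels
$columns = 'Test','Drive','Operation','Access','Blocks','QueueDepth','Run','IOPS','MBps','LatencyMs','Cpu'
$runs = $lines | ConvertFrom-Csv -Header $columns

# Group the repeats that share the same settings and average their IOPS
$summary = $runs |
    Group-Object Operation, Access, Blocks, QueueDepth |
    ForEach-Object {
        [pscustomobject]@{
            Settings = $_.Name
            AvgIOPS  = ($_.Group | Measure-Object -Property IOPS -Average).Average
        }
    }

$summary
```

The same grouping works for the MB/sec and latency columns by swapping the property name.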

14 April 2017

Pinging a subnet range using PowerShell

Every once in a while I come across a network with sub-optimal documentation. I usually want to add a new device to the network without having to hunt for a free IP address. One of the simple tests to see if an IP address is in use, is sending a ping. You can use a network scanner to ping an entire IP subnet or you can script something yourself. This is my PowerShell based script that I use in these cases:

    # Ping an IP range
    # based on PoshPortScanner.ps1 (https://blogs.technet.microsoft.com/heyscriptingguy/2014/03/19/creating-a-port-scanner-with-windows-powershell/)
    $Net = "192"
    $Brange = "168"
    $Crange = 2..8
    $Drange = 1..254
    $Logfile = "C:\users\Pietje\Desktop\ping-output.txt"

    foreach ($B in $Brange) {
        foreach ($C in $Crange) {
            foreach ($D in $Drange) {
                # Build the address from the four octets
                $ip = "{0}.{1}.{2}.{3}" -f $Net, $B, $C, $D
                # Log the address when it answers a single ping
                if (Test-Connection -BufferSize 32 -Count 1 -Quiet -ComputerName $ip) {
                    "$ip, responding to ping" >> $Logfile
                }
            }
        }
    }
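The nested loops are easy to check without touching the network. This sketch only builds the list of addresses with the same -f format operator and counts them. The smaller ranges are just example values to keep the output short.

```powershell
# Example ranges (smaller than in the script above, to keep the list short)
$Net    = "192"
$Brange = "168"
$Crange = 2..3
$Drange = 1..4

# Build every address in the ranges without pinging anything
$addresses = foreach ($B in $Brange) {
    foreach ($C in $Crange) {
        foreach ($D in $Drange) {
            "{0}.{1}.{2}.{3}" -f $Net, $B, $C, $D
        }
    }
}

$addresses.Count    # 2 x 4 = 8 addresses
$addresses[0]       # 192.168.2.1
```

With the ranges from my script (2..8 and 1..254) the same loops produce 1778 addresses, so a full scan can take a while when most of them don't answer.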

21 March 2017

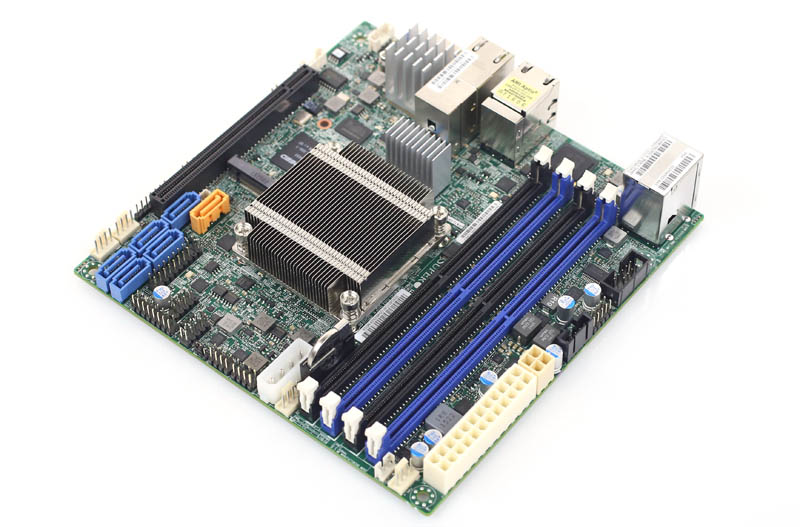

Supermicro X10SDV CPU cooling

My homelab server uses a Supermicro X10SDV-6C-TLN4F motherboard that does not come with a CPU fan because it's meant to be screwed into a 1U chassis with its own fans. There's a low heatsink on the CPU to keep it cool using the chassis fans. The X10SDV-6C+-TLN4F does have a little CPU fan on the heatsink, but it was not available at the webshop where I bought the homelab server.

Silence

I did not buy myself a 1U chassis but a Supermicro SuperChassis CSE-721TQ-250B micro tower. This nice steel chassis offers a few more storage options and, thanks to the huge fan in the back, it's nearly silent.

This fan is mostly there to keep the four 3.5" drive bays cool, and it's placed too high on the back of the chassis to add significant airflow over the tiny heatsink on the CPU. With all the extra space around and above the heatsink, it gets barely any cooling at all.

Heat

The low heatsink requires a lot of moving air to keep the CPU at a reasonable temperature. For example: using no fan on the CPU heatsink means I must finish my calculations within three minutes or the CPU moves into thermal shutdown range. This makes using the little server no fun. A single Windows installation makes the CPU overheat and causes the whole server to power off. My colleague already warned me about this before I bought the server, so I knew I had to create more airflow over the heatsink.

Old stuff

Because I like pragmatic solutions, I decided to use a fan I had lying around since that's the cheapest and fastest solution. A bigger fan can move the same amount of air while spinning at a lower RPM. So I grabbed the biggest PWM fan from the drawer filled with old computer stuff. It was actually a boxed cooler of some sort.

I don't remember ever owning an AMD desktop but I sure was happy to find this fan.

The attached heatsink is far too big to be mounted on the X10SDV motherboard so that had to go. Someday I may need it, so it's back in the drawer. Yes, I keep way too much junk. But look, sometimes it's very useful to keep a heap of old stuff!

Let it fly

With a fan this big, there's no way to mount it on the tiny heatsink on the motherboard. I decided to add to the "front-to-back" airflow and keep some hot components near the CPU cool too. I suspended the fan diagonally, as shown in the picture below.

That's right, the fan is hanging from the drive cage with two tie-wraps. Sometimes I fear one of the cables will end up in the blades but so far, none have. The fan pushes air around and into the heatsink and up the backside of the chassis, where it's extracted by the main case fan.

Cool and silent

So does it work? Yes it does! It keeps the CPU nice and cool and it adds some airflow over the rest of the components on the motherboard near the CPU. The NVMe SSD, BMC and the network controller get to experience a nice cool breeze.

FAN1 is the CPU fan and FAN2 is the case fan. Both are BIOS controlled and spin up when needed. I've never actually heard the fans spin up during use. Just once during testing (blowing hot air into the chassis with a hair dryer to make sure it worked).

